Trade smarter. Earn more. Stress less.

ANNIE watches the market for you and delivers clear, data-driven buy and sell signals that cut your stress, save you time, and boost your returns.

How It Actually Works

No Crystal Ball Required

ANNIE isn’t guessing the future; it’s learning from the past and acting on what works now. It delivers trading decisions built on performance, not predictions.

Instead of chasing headlines or gambling on tomorrow’s winners, ANNIE focuses on:

- Real patterns from real market data

- Strategies that hold up under pressure

- Smart decisions made in real time—not fortune-telling

ANNIE’s neural networks evolve from results, not forecasts—behaving like a seasoned trader making calculated moves, not wild bets.

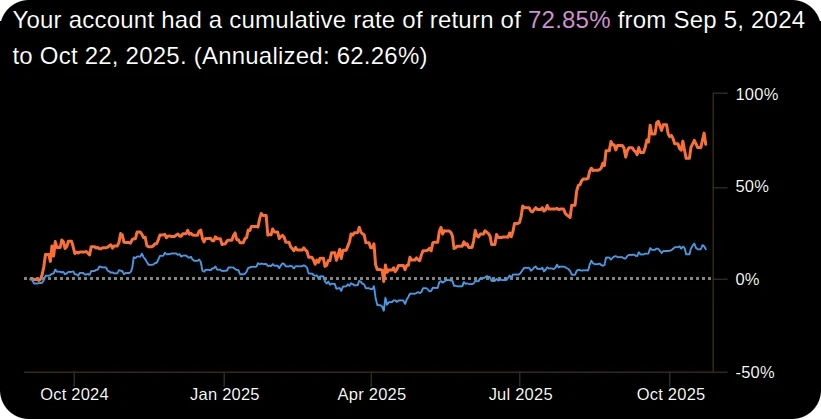

Real-Time AI Trading: Returns proven with real trades executed in a live Schwab IRA account

Important: Past performance is not indicative of future results. Don’t just focus on the gains—review the drawdowns and make sure ANNIE’s risk profile fits your own tolerance.

Your Simple 3-Minute Daily Routine

Every trading day at 3 PM Eastern:

- Receive ANNIE's top 5 picks in your inbox

- Review and place trades on your platform (Robinhood, Schwab, etc.)

- Click "bought it" so ANNIE tracks your holdings

- Sell when ANNIE sends an exit signal

That's it. The whole routine takes about three minutes a day.

What Makes ANNIE Different

No Emotional Trading

ANNIE’s algorithms eliminate fear, greed, and impulsive decisions. It trades with cold precision—no chasing trends, no second-guessing, just data-driven execution.

No Complex Formulas

You won’t see walls of equations or vague jargon about “price-action volatility patterns.” ANNIE’s results are real, not theoretical. Built on proven AI behavior, not academic fluff.

No Empty Promises

What you see is what ANNIE actually does: live trades, real money, verifiable results. Every buy, sell, or hold signal is visible and backed by performance you can confirm.

Real Results From Real Traders

"I want to compliment you because I’ve been testing your picks since the end of July 2024, and so far, they’ve achieved what I consider to be amazing performance. Around 80% in three and a half months. I’ve tried at least 10-15 of the best AI stock pickers. I can tell you that in terms of performance, yours is currently the one with the highest return! "

Paolo Made Around 80% in 3.5 Months!

"I want to say I put 1k into Annie a week ago and I'm up 35%. Annie is the truth!"

Instant Profit for Jerome!

Choose Your Plan

Ready to trade smarter, not harder?

FREE Tier

Free Daily Pick

- One AI-selected signal daily

- No credit card required

- See how ANNIE works

- Weekly Newsletter and Track Record

Pricing Special

then $19.99/Month or $199.99/Year (Save 17%)

- Access to all 1200+ signals

- ANNIE's Trade-Along

- Build your own watchlist

- Signals for your portfolio

Lock in your pricing (regularly $49/month)